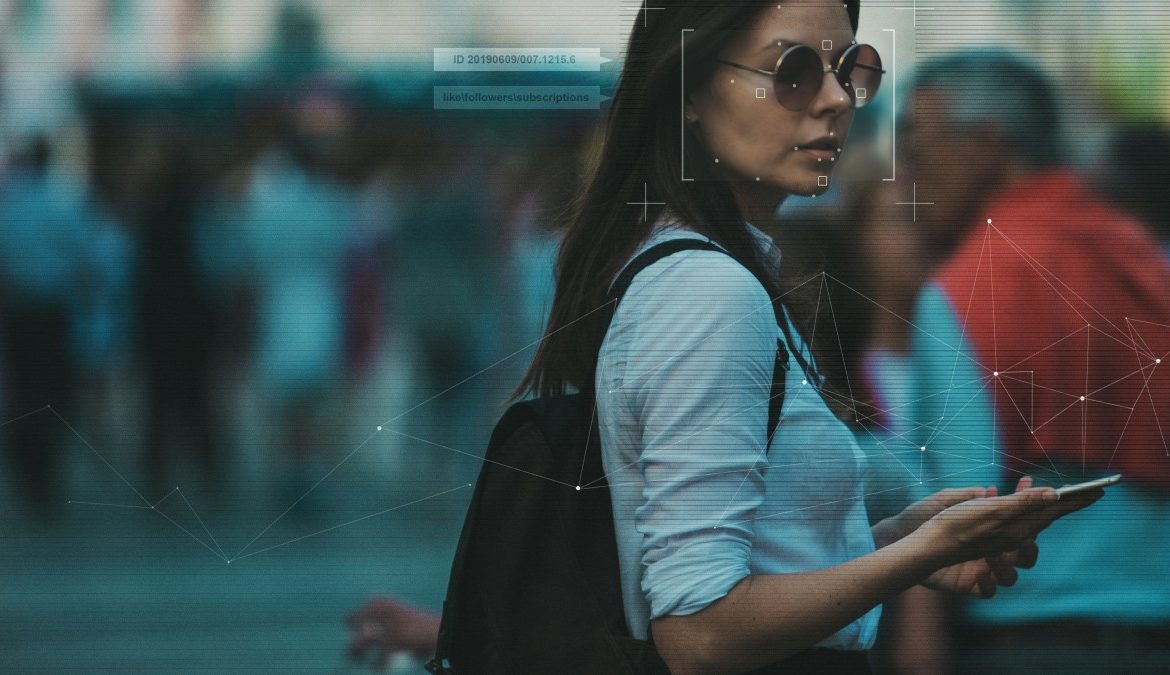

Research suggests that Amazon’s facial analysis algorithms have been struggling with racial and gender bias. The MIT Media Lab found that Amazon’s Rekognition had no trouble correctly identifying the gender of lighter-skinned men. However, it classified women as men almost a fifth of the time, and darker-skinned women as men nearly one time in three. Microsoft’s and IBM’s software both outperformed Amazon’s tool: Microsoft’s solution mistook darker-skinned women for men 1.5 percent of the time.

MIT’s tests took place throughout 2018. Amazon, however, has disputed the results. The company argued that the researchers hadn’t used the current version of Rekognition. It also said that the gender identification test used facial analysis (which picks out faces in images and assigns generic attributes to them) rather than facial recognition (which looks for a match to a specific face), and noted that the two are distinct software packages.

Matt Wood, general manager of deep learning and artificial intelligence at Amazon Web Services, told VentureBeat that the company recommends using an up-to-date version of Amazon Rekognition. He said that when Amazon ran the current version on similar images retrieved from parliamentary websites and on the Megaface image dataset, it found exactly zero false matches at the recommended 99% confidence threshold.

This is not the first time such software has come under fire. Last February, researchers from MIT and Stanford reported that three facial analysis programs exhibited similar skin-color and gender bias. Since then, IBM has released a dataset that it believes will improve the accuracy of facial analysis tools, while Microsoft has called for more regulation of the technology to maintain high standards.

Facial Recognition

Meanwhile, in November, a group of lawmakers asked Amazon to explain its decision to supply Rekognition to law enforcement, after deeming the company’s response to an earlier letter insufficient. Amazon has also pitched Rekognition to Immigration and Customs Enforcement (ICE). Some shareholders, for their part, have asked the company to stop selling the technology, arguing that it may violate people’s civil rights.

Two years ago, the ACLU discovered that Orlando police were using Rekognition, Amazon’s controversial facial recognition system. Police chief John Mina initially said they were only testing the software at their headquarters. Later, at a news conference, Mina admitted that three of the city’s downtown IRIS cameras were also equipped with the software. He insisted, however, that even with Rekognition running on public cameras, it could only track the seven officers who had volunteered to test the system. Mina acknowledged that the department might use the software to track individuals in the future, but said that was still a long way off.

He added that the department tests new equipment all the time, such as new shields, vests, guns, and gear for police cars. That does not mean it will adopt any particular product; it simply wants to see whether the product works properly.